Optimization of order and inventory sourcing decisions in supply chains with multiple nodes, carriers, shipment options, and products

February 2, 2023 • 27 min read

Order sourcing decisions can be defined as the logistics decisions related to how customer orders and internal inventory requisitions are fulfilled: what nodes (warehouses, distribution centers, stores, etc.) the inventory should be sourced from, what carriers should be used to ship it, what shipping options such as priority delivery or airfreight should be chosen, and so on. The quality of order and inventory sourcing decisions directly impacts shipping costs, delivery times, product availability, and other mission-critical business properties and metrics, making it a crucial optimization enabler for many industries, including retail, manufacturing, logistics, and pharmaceutical.

In practice, many order-sourcing problems can be conveniently modeled using mixed integer programs (MIPs). However, these methods are not always feasible in complex environments with multiple distribution centers, carriers, shipment options, and other factors and constraints because of the high problem dimensionality – as we demonstrate in the next sections, even a moderately sized environment can translate into MIP problems with billions of variables.

In this article, we analyze several common sourcing optimization scenarios, develop a relatively general framework for representing sourcing problems, and then evaluate and compare two optimization strategies (MIP solvers and metaheuristic (stochastic) optimization) on problems of different sizes.

Why can sourcing optimization be challenging?

To better understand why sourcing optimization can lead to high-dimensional optimization problems, let us consider several logistics environments, starting with the most basic scenario, and gradually increasing the complexity.

Scenario 1: Multiple carriers

Let us assume a company that sells only one product, has only one source node (e.g. a distribution center), $M$ destination nodes (e.g. consumers), and $N$ carriers the company can choose from to deliver the orders. We further assume that the company needs to deliver $M$ orders, with one order for each destination, and the order for $i$-th destination is associated with product quantity $q_i$.

The carrier is chosen independently for each order (or, alternatively, destination), and the shipping cost for delivering one product unit to $i$-th destination by $j$-th carrier is $c_{i, j}$. This scenario can be conveniently visualized as a graph, but also as a table with $M$ rows and $N$ columns, as shown in the following figure:

In general, it is not necessarily the case that all carriers serve all destinations, and this can be codified by assigning infinite costs to the unavailable carrier-destination combinations. The sourcing optimization problem can then be codified as the following MIP with $M*N$ decision variables $x_{i,j}$:

$$

\begin{aligned}

\underset{x}{\text{minimize}}\quad & \sum_{i=1}^M \sum_{j=1}^N x_{i,j} \cdot c_{i,j} \\

\text{subject to} \quad &\\

& x_{i,j} \text{ are non-negative integers} \\

& \sum_{j=1}^N x_{i,j} = q_i, \quad \text{for all }i

\end{aligned}

$$

In this problem formulation, the loss function corresponds to the total shipping cost, and decision variables are the product quantities $x_{i,j}$ that are delivered to $i$-th destination by $j$-th carrier. The second constraint ensures that the quantities delivered by all carriers to a specific destination add up to the required order quantity $q_i$.

In many environments, it is preferable or necessary to avoid order splits into multiple consignments. Under this assumption, we can switch to binary decision variables where $x_{i,j}$ where $x_{i,j}$ equals one if carrier $j$ is assigned to deliver order $i$, and zero otherwise. The problem specification can then be changed as follows:

$$

\begin{aligned}

\underset{x}{\text{minimize}}\quad & \sum_{i=1}^M \sum_{j=1}^N q_i \cdot c_{i,j} \cdot x_{i,j} \\

\text{subject to} \quad &\\

& x_{i,j} \in {0, 1}\\

& \sum_{j=1}^N x_{i,j} = 1, \quad \text{for all }i

\end{aligned}

$$

In this problem formulation, the loss function corresponds to the total shipping cost, the first constraint ensures that valid binary decisions are made, and the second constraint ensures that exactly one carrier is assigned to each order. This problem is somewhat trivial because it is separable – each order can be optimized in isolation by choosing the least expensive carrier. This can be done without invoking any advanced optimization algorithms.

Scenario 2: Multiple DCs and carriers

Next, let us extend the previous scenario with multiple souring nodes. Assuming that we have $N_{\text{src}}$ souring nodes such as distribution centers (DCs) and $N_{\text{meth}}$ delivery methods such as carriers, we can choose from $N = N_{\text{src}} \times N_{\text{meth}}$ sourcing options for each order. We can visualize this scenario as follows, where the problem can still be represented as a table, but with a larger number of columns:

Assuming that we avoid order splitting, the problem specification can be the same as in the first scenario except that index $j$ iterates over all $N$ combinations of source nodes and delivery methods. This problem statement is also trivial because we independently choose the optimal source-method combination for search order.

Scenario 3: Multiple DCs, carriers, products, and shipping options

In many environments, we have more than one product, and multiple orders with different shipping dates can be placed by a single client. We can represent such multi-product, multi-order environments as a table where each row corresponds to a transportation task which is a combination of a destination node, product (SKU), and delivery date:

Assuming that we have $M_{\text{dest}}$ destination nodes, $M_{\text{prod}}$ products, and $M_{\text{d}}$ dates, the total number of transportation tasks will be $M = M_{\text{dest}} \times M_{\text{prod}} \times M_{\text{d}}$.

We can still use the formal problem specification from Scenario 1, but with the index $i$ iterating over all these $M$ combinations. Finding a solution can still be a trivial problem provided that all variables are optimized independently.

Scenario 4: Capacity constraints

In real-world environments, the decision variables defined in the previous sections are subject to various constraints. For example, source nodes generally have finite capacities, that is, the maximum number of units that can be served on a given date. Assuming such constraints, the problem specification for Scenario 2 would transform into the following:

$$

\begin{aligned}

\underset{x}{\text{minimize}}\quad & \sum_{i=1}^M \sum_{j=1}^N q_i \cdot c_{i,j} \cdot x_{i,j} \\

\text{subject to} \quad &\\

& x_{i,j} \in {0, 1}\\

& \sum_{j=1}^N x_{i,j} = 1, \quad \text{for all }i \\

& \sum_{i=1}^M \sum_{j=1}^N q_i \cdot x_{i,j} \cdot A_{i,j}(l) \le S_A(l), \quad \text{for all }l

\end{aligned}

$$

where $A_{i,j}(l)$ is the indicator function which equals one if the assignment $(i,j)$ is sourced from $l$-th source node and zero otherwise, and $S_A(l)$ is the capacity of node $l$ expressed in product units.

The above constraint fundamentally changes the optimization problem – the decision variables for different orders become interdependent because the capacity constraints are defined for all orders collectively. This means that the problem cannot be divided into multiple order-level problems anymore, and we need to use a fully-fledged MIP solver or some other advanced tool for finding a solution.

If all the transportation options (columns) were characterized by the same transportation time, then we might easily decompose the problem into $M_{\text{d}}$ independent problems, where $M_{\text{d}}$ is the overall number of delivery dates we plan the transportation for. For example, if we have only two delivery dates ($M_{\text{d}} = 2$) 2022-04-17 and 2022-04-18, and the duration of transportation is always 3 days, then we might split all the transportation tasks (all the rows) into $M_{\text{d}} = 2$ groups. The first group, for example, would include transportation tasks (rows) with delivery date 2022-04-17, and all the transportation options would have the same shipment date – 2022-04-14 (3 days earlier than the delivery date). All transportation options in the second group would also have the same shipment date (2022-04-15) and the same delivery date (2022-04-18). It is essential here that shipment dates in such a case do not overlap between different groups. The latter makes the $M_{\text{d}}$ optimization problems mutually independent.

In most real settings the situation is not that simple: various transportation options (columns) are characterized by different transportation times. If we split all the transportation tasks into $M_{\text{d}}$ groups according to the delivery date, then different groups would still have overlapping shipment dates and, thus, they would be linked by common constraints on the capacities $S_A(l)$ of source nodes at particular shipment dates. This makes the easy decomposition of the whole optimization problem into $M_{\text{d}}$ independent sub-problems of lower dimension impossible.

Scenario 5: Container and truck costs

In the previous scenarios, we tacitly assumed that the cost $S_{i,j}$ of transportation of $q_i$ units using $j$th transportation option is simply proportional to the corresponding variable cost $c_{i,j}$: $S_{i,j} = q_i \times c_{i,j}$. In real settings, we often pay some constant cost associated with the transportation “quanta”, like containers, trucks, railway carriages, etc. For example, if the capacity of a single container is 500 units and its constant cost is \$1000, but we loaded only 5 units of the product (1% of the container’s capacity), then we still pay $1000 in addition to the variable cost.

At first glance, such a quantization introduces nonlinearity into the problem formulation: the overall cost $S_{i,j}$ depends now on the amounts $q_i$ of the products to be delivered as a stepwise function, which is essentially nonlinear. Nonlinearity violates the fundamental assumptions of MIP methodology. Fortunately, the problem can still be reformulated as a linear one at the expense of the incorporation of additional variables. The overall formulation of the optimization problem looks as follows.

$$

\begin{aligned}

\underset{x,\ y}{\text{minimize}} \quad & \sum_{i=1}^M \sum_{j=1}^N q_i \cdot c_{i,j} \cdot x_{i,j} + \sum_{k} \sum_{l} \sum_{n} C_{k,l,n} \cdot y_{k,l,n}\\

\text{subject to} \quad &\\

& x_{i,j} \in {0, 1}\\

& \sum_{i=1}^M x_{i,j} = 1, \quad \text{for all }i \\

& \sum_{i=1}^M \sum_{j=1}^N q_i \cdot x_{i,j} \cdot A_{i,j}(l) \le S_A(l), \quad \text{for all }l \\

& \sum_{i=1}^M \sum_{j=1}^N q_i \cdot x_{i,j} \cdot I_{j}(k,l,n) \le y_{k,l,n} \cdot V_{k,l,n}, \quad \text{for all }k,l,n

\end{aligned}

$$

Here we introduced the following new variables and functions:

- $y_{k,l,n}$ is an integer non negative variable – the number of containers required for transportation by $k$th carrier from $l$th source node at $n$th shipment day;

- $C_{k,l,n}$ is the constant cost of a container for $k$th carrier, $l$th source node, $n$th shipment day;

- $V_{k,l,n}$ is the volume of a single container for the containers proposed by $k$th carrier for $l$th source node at $n$th shipment day;

- $I_{j}(k,l,n)$ is an indicator function taking value 1 if the $j$th transportation option implies the use of $k$th carrier, $l$th source node, and $n$th shipment day (and taking zero value otherwise).

It is important to note that two groups of variables are now subject to optimal choice via MIP procedure: $x_{i,j}$ and $y_{k,l,n}$.

General problem structure

Let us now combine all the considerations discussed in the previous sections into one framework. Consider the following problem: $M_{\text{prod}}$ products are to be delivered to $M_{\text{dest}}$ destination points at $M_{\text{d}}$ calendar days. We have here essentially $M = M_{\text{prod}} \times M_{\text{dest}} \times M_{\text{d}}$ individual transportation tasks. Each task is characterized by the quantity $q_i, i = 1, \ldots ,M$.

Suppose also that delivery of each product can be performed using a combination of one of $N_{\text{src}}$ source points and one of $N_{\text{m}}$ transportation methods. Here, the term “transportation method” is used in a broad sense and might include the choice of the transportation company (carrier), the means of transportation (particular type of trucks, railway carriages, etc), and the duration of delivery. Thus, for each of $M$ transportation tasks we have $N = N_{\text{src}} \times N_{\text{m}}$ transportation options. In a more general formulation, we have $M$ transportation tasks, and we have to choose one (and only one) transportation option for each task from $N$ such options available. It is convenient to represent the problem graphically as the following $M \times N$ table:

A general transportation problem: Variable costs

| Transportation tasks: | Transportation options: | ||||||

| Task_ID | Quantity | 1 | 2 | 3 | … | 999 | 1000 |

| 1 | 50 | 7.1 | 10.5 | 7 | … | 10.5 | 11.5 |

| 2 | 30 | 12 | 8 | … | 8.5 | ||

| 3 | 100 | 10 | 5.9 | … | 11 | ||

| 4 | 75 | 7.1 | 8.5 | 8.5 | … | 7 | 11 |

| 5 | 25 | 12 | 7.5 | … | 9 | 8 | |

| … | … | … | … | … | … | … | … |

| 1000000 | 35 | 10 | 10 | 6.5 | … | 8 | 11 |

Figure 1. Formulation of a general transportation problem.

Here, the combinations task+option that are not feasible are marked in gray, and the option chosen for each particular task (row) is marked in red. Each cell contains the variable cost, i.e. the cost per one transportation unit. The costs of all $M$ individual decisions add up to the total cost:

$$

Z = \sum_i^M q_i \cdot c_{i,J(i)}

$$

Here,

- $q_i$ is the quantity of goods to be delivered within the $i$th transportation task (the second column in the above table);

- $c_{i,j}$ is the variable cost of the $j$th transportation option for the $i$th transportation task (the value in the $(i,j)$th cell of the table); and

- $J(i)$ is the index $j$ of the transportation option chosen for the $i$th transportation task.

The goal of the optimization is to choose such a solution vector $[J(1), J(2), \ldots , J(M)]$ that the objective function – usually the total cost Z – is minimal. The brute force approach – when all possible solutions are checked – is not feasible, even for very small $M$ and $N$. This is because the overall number of possible solutions is $N^M$, and even for, say, 100 tasks and 10 options, the number of combinations is described as 1 with 100 zeros.

Without additional restrictions, this problem could easily be solved by a simple greedy search algorithm: for each transportation task, select the transportation option with the minimal cost. In other words, we might simply optimize each task individually. Unfortunately, real problems are much more complex – they normally involve many different constraints, and the overall cost is not necessarily a sum of individual costs. These factors make the individual choices mutually dependable, and the naive approach of individual task optimization is not feasible here.

Real-world problem example

Suppose the problem is to optimize the delivery of 1000 SKUs to 100 retailers for 10 calendar days. For each combination SKU+retailer+day, the delivery can be performed via one of 20 distribution centers (DC) using one of 10 transportation companies (TC), and one of 5 transportation methods proposed by the company. Each transportation method is also characterized by the duration dj of delivery in days. Thus, we have here $M = 1,000,000$ ($=1000 \times 100 \times 10$) transportation tasks and $N=1000$ ($20 \times 10 \times 5$) transportation options.

A graphical representation of the problem is shown in Fig.2, where the first table contains variable costs of individual decisions, and the second one provides necessary details on the transportation options.

Problem description: Table 1. Variable costs (per 1kg)

| Transportation tasks: | Transportation options: | ||||||||

| Task_ID | SKU_ID | Retailer_ID | Delivery Date | Quantity | 1 | 2 | 3 | … | 1000 |

| 1 | 1 | 1 | 2022-04-14 | 50 | 7.1 | 10.5 | 7 | … | 11.5 |

| … | … | … | … | … | … | … | … | … | … |

| 10 | 1 | 1 | 2022-04-23 | 30 | 12 | 9 | 8 | … | 8.5 |

| 11 | 1 | 2 | 2022-04-14 | 110 | 10 | 5.9 | 11 | … | 9 |

| … | … | … | … | … | … | … | … | … | … |

| 1000 | 1 | 100 | 2022-04-23 | 30 | 8 | 9.5 | 6 | … | 7.5 |

| 1001 | 2 | 1 | 2022-04-14 | 75 | 7.1 | 8.5 | 8.5 | … | 11 |

| 1002 | 2 | 1 | 2022-04-15 | 25 | 12 | 7 | 7.5 | … | 8 |

| … | … | … | … | … | … | … | … | … | … |

| 1000000 | 1000 | 100 | 2022-04-23 | 35 | 10 | 10 | 6.5 | … | 11 |

Problem description: Table 2. Transportation options (Details)

| Transportation option | |||||

| 1 | 2 | 3 | … | 1000 | |

| Distribution Center | 1 | 1 | 1 | … | 10 |

| Carrier | 1 | 1 | 2 | … | 20 |

| Transportation Method | 1 | 2 | 1 | … | 5 |

| Duration (days) | 2 | 3 | 1 | … | 3 |

| Constant Cost (per container) | 1000 | 0 | 800 | … | 1200 |

| Container Capacity | 500 | 1500 | … | 600 | |

Figure 2. Formulation of a sourcing optimization problem for a network with multiple SKUs, DCs, and carriers. The top table specifies the variable costs for individual decisions, and the bottom table describes the transportation options.

Here, the last two rows in the second table describe additional constant costs associated with particular transportation options. The additional constant costs are generated by additional “quantization” of the delivery, when an integer positive number of “containers” of particular capacity is used for transportation, and each such container is characterized by a constant cost. For example, even if only one transportation unit is to be delivered using option 1, we still need to use a whole container suitable for up to 500 transportation units, and this adds 1000 units of cost to the overall cost. The “containers” here could be – besides containers per se – other transportation “quanta”, like trucks, railway carriages, etc.

If we assume that there are also constraints on the maximal throughput of each DC at each shipment day, this could be represented graphically as a table shown in Fig.3.

Problem description: Table 3.

Constraints A: Maximal capacity of DCs by shipment day

| Distribution Center ID | Shipment Date | Maximal Volume |

| 1 | 2022-04-10 | 10,000 |

| 1 | 2022-04-11 | 12,000 |

| … | … | … |

| 1 | 2022-04-22 | 14,000 |

| 1 | 2022-04-10 | 8,000 |

| … | … | … |

| 20 | 2022-04-21 | 7,000 |

| 20 | 2022-04-22 | 9,500 |

Figure 3. Formulation of constraints for throughputs of DCs at particular shipment days.

Suppose there are also constraints on the maximal amount of goods that can be transported by a particular carrier from a particular DC on a particular shipment day (see Fig.4)

Problem description: Table 4.

Constraints B: Maximal load of carriers by DC and shipment day

| Carrier ID | Distribution Center ID | Shipment Date | Maximal Volume |

| 1 | 1 | 2022-04-10 | 2,000 |

| 2 | 2022-04-10 | 2,500 | |

| … | … | … | |

| 1 | 20 | 2022-04-10 | 1,800 |

| 1 | 1 | 2022-04-11 | 2,000 |

| … | … | … | … |

| 10 | 9 | 2022-04-22 | 3,000 |

| 10 | 10 | 2022-04-22 | 2,300 |

Figure 4. Formulation of constraints for maximal transportation volume for particular carriers at particular DCs at particular shipment days.

It is important to note here that the existence of the constraints represented by the last two tables makes the naive greedy search algorithm practically unfeasible for this class of problems. Moreover, the different duration of delivery means that the problem cannot be decomposed by days because the shipment dates for different delivery dates overlap.

MIP problem formulation

The application of regular methods of optimization – like linear IP and MIP [1] – requires specification of a linear objective function to be minimized, and a system of linear inequalities or equalities representing the constraints. We represent each individual choice $(i,j)$ as a separate binary variable $x_{i,j}$ which takes only two values: 0 and 1. Here $i$ is the index of the transportation task (row) and $j$ is the index of the transportation option (column). We also impose an additional constraint for each $i$th task (row) that the sum of all $x_{i,j}$ is 1. We also specify other constraints. Then, the optimization problem looks as follows:.

$$

\begin{aligned}

\underset{x,\ y}{\text{minimize}} \quad & \sum_{i=1}^M \sum_{j=1}^N q_i \cdot c_{i,j} \cdot x_{i,j} + \sum_{k} \sum_{l} \sum_{m} \sum_{n} C_{k,l,m,n} \cdot y_{k,l,m,n}\\

\text{subject to} \quad &\\

& x_{i,j} \in {0, 1}\\

& \sum_{j=1}^N x_{i,j} = 1, \quad \text{for all }i \\

& \sum_{i=1}^M \sum_{j=1}^N q_i \cdot x_{i,j} \cdot A_j(l,n) \le S_A(l,n), \quad \text{for all }l,n \\

& \sum_{i=1}^M \sum_{j=1}^N q_i \cdot x_{i,j} \cdot B_j(k,l,n) \le S_B(k,l,n), \quad \text{for all }k,l,n \\

& \sum_{i=1}^M \sum_{j=1}^N q_i \cdot x_{i,j} \cdot I_j(k,l,m,n) \le y_{k,l,m,n} \cdot V_{k,l,m,n}, \quad \text{for all }k,l,m,n

\end{aligned}

$$

Here we use the following notation:

- $x_{i,j}$ are binary (0,1)-variables which take the unit value for chosen row+column combination;

- $y_{k,l,m,n}$ are integer nonnegative variables – the number of containers used by the $k$th carrier for transportation from the $l$th DC by the $m$th method of transportation at the $n$th shipment day;

- $C_{k,l,m,n}$ are the given constant costs of a single container;

- $q_i$ is the quantity of the product to be delivered within the $i$th transportation task (row);

- $c_{i,j}$ is the variable cost of transportation of a single unit for $i$th task and $j$th option;

- $A_j(l,n)$ are indicator functions that take the unit value only if the $j$ transportation option implies the usage of the $l$th DC at the $n$th shipment day;

- $S_A(l,n)$ is the maximal throughput of the $l$th DC at the $n$th shipment day;

- $B_j(k,l,n)$ are indicator functions that take the unit value only if the $j$th transportation option implies the usage of the k-th carrier and the l-th DC at the n-th shipment day;

- $S_B(k,l,n)$ is the maximal transportation volume for the the $k$th carrier at the $l$th DC at the $n$th shipment day;

- $I_j(k,l,m,n)$ are indicator functions that take a unit value only if the $j$th transportation option implies the use of the $k$th carrier, the $l$th DC, the $m$th method of transportation at the $n$th shipment day;

- $V_{k,l,m,n}$ is the volume of the containers used by the $k$th carrier for transportation from the $l$th DC by the $m$th method of transportation at the $n$th shipment day.

Dimensionality of the MIP optimization problem

The major contribution to the overall dimensionality of the MIP-optimization problem originates from the $x_{i,j}$ variables in the formulation of the optimization problem. The number of such variables is $D = M \times N$, where $M$ is the number of transportation tasks, and $N$ is the number of transportation options. In our example above $M = 1,000,000$ and $N = 1,000$; thus the dimensionality $D$ is 1 billion.

Variables $y_{k,l,m,n}$ correspond to combinations carrier + DC + transportation method + shipment day. In our numerical example, we have 10,000 such combinations. It is a tiny fraction of the total number of $x_{i,j}$ variables.

Problem formulation for metaheuristic methods

When solving a high-dimensional MIP is prohibitively expensive, we can attempt to apply a general class of approaches known as metaheuristic (or stochastic) optimization [2]. In contrast to the regular methods of optimization like MIP, these approaches are devoid of mathematical rigor, but they are capable of dealing with high dimensionality in practice. In contrast to the regular approaches, the metaheuristic ones do not guarantee the global optimality of the solution, but they are often able to provide practically valuable reduction of the objective functions (e.g. the total cost) in practically acceptable time.

For metaheuristic methods, the formal formulation of the problem is somewhat different. We consider the overall optimization problem as a purely combinatorial one. For each transportation task, say, for the $i$th one, we select a single integer index $j_i \in {1,2,\ldots,N}$ specifying the chosen transportation option. The objective function to be minimized consists of two essentially different components:

$$

Z(J) = Z_C(J) + \alpha \cdot Z_p(J).

$$

Here,

- $J$ is the solution vector $[j_1, j_2, \ldots , j_M], j_i \in {1,\ldots,N}$;

- $Z_C(J)$ is the total cost corresponding the solution $J$ that is of primary interest (computed in the same way as the problem formulation of MIP above);

- $Z_p(J)$ is the artificially introduced total penalty for violation of constraints; and

- $\alpha$ is a multiplier that affects the overall effect of constraints on the final solution.

We typically use a simple form of the penalty function, where each transportation unit exceeding the given constraint contributes a unit value to $Z_p(\cdot)$. An essential distinction from the problem formulation for MIP is that constraints do not participate explicitly; they are taken into account via the penalty term in the above expression for the objective function.

Solutions

Regular methods (MIP)

The expressions we used in the mathematical formulation of the optimization problem for MIP are rarely used in practice. Depending on the optimization instrument used, the expressions are to be represented by some computer code or formal data description in, say, JSON format. For illustrative purposes, we use OR-Tools from Google so the examples we show below correspond to this instrument.

For illustration, we consider a problem of low dimensionality $D=1,000$ with only $M=100$ transportation tasks and $N=10$ transportation options (5 DCs, 2 carriers, 1 transportation method). For simplicity of presentation, we consider the case of no constant costs associated with “quantization” of transportation into discrete units like containers. This means that the second term in the objective function for MIP – the one containing variables $y_{k,l,m,n}$ – is absent.

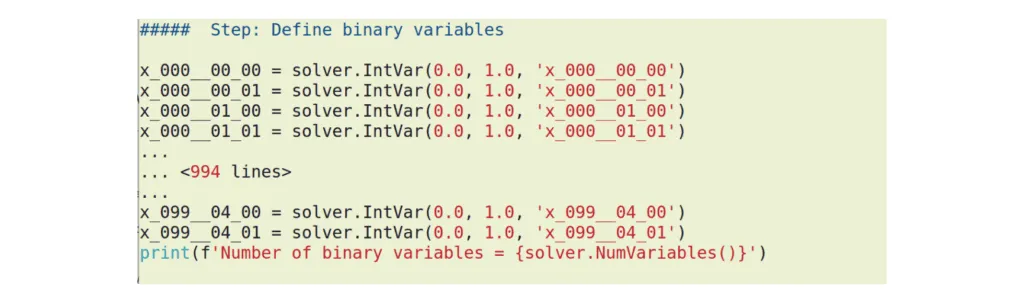

We have used our code generator that automatically produces Python code for OR-Tools aimed at optimization of this class of problems. Fig.5 shows a fragment of the code where 1000 binary variables are specified.

Figure 5. Automatically generated Python code for OR-Tools: definition of 1000 binary variables $x_{i,j}$

These variables are denoted $x_{i,j}$ in the expressions above, and the corresponding Python variables in the code have the form x_AAA__BB_CC, where AAA is the index corresponding to the transportation task, BB – the index of DC, and CC is the index of the carrier.

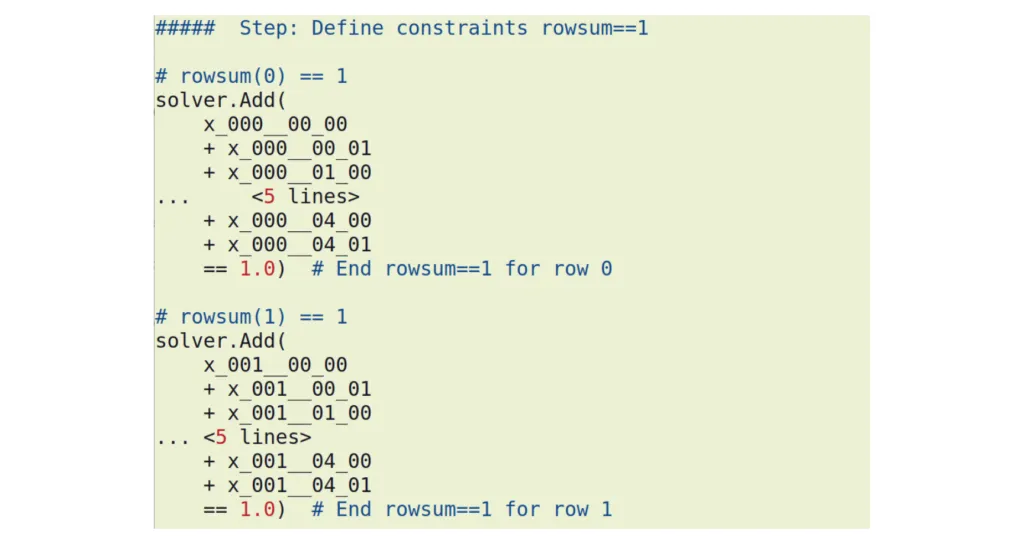

The constraints specifying that only one cell in a row is selected look somewhat different in the code. Fig 6, for example, shows a small fragment of the code with constraints for only 2 rows of 100.

Figure 6. A fragment of automatically generated Python code for OR-Tools: Only two of the 100 constraints specifying the unit value of the sum of $x_{i,j}$ in each row are shown.

Fig.7 shows automatically generated code corresponding to the constraints imposed on the maximal throughput of DCs at particular shipment days.

We show the code only for illustrative purposes. Such automatically generated code is not supposed to be read by humans. Even for the problem of low dimensionality as D=1000, there are more than 6800 lines of code. In our solutions, such code is usually only an intermediate artifact in the overall workflow.

At the next stage, the code is simply executed by a Python interpreter. For dimensionality of a few thousand variables, OR-Tools produce the solution almost instantaneously, at least for this type of problem. The output typically contains the solution vector with values of the variables, and the value of the objective function representing the globally optimal solution (see Fig.8).

Metaheuristic approaches: Simulated annealing

For this type of problem – where dimensionality is too high for regular methods – we usually use metaheuristic methods constructed on the basis of the methodology of simulated annealing [2, Ch.11]. This class of methods rests on ideas borrowed from statistical physics, namely, Boltzmann distribution:

$$

P(x_n) = \frac{exp(-E(x_n) / kT)}{\sum\limits_i exp(-E(x_i) / kT)}.

$$

The formula states that the probability $P(x_n)$ that a physical system in thermodynamic equilibrium can be found in the state $x_n$ is proportional to the exponential term $exp(-E(x_n) / kT)$.

Here,

- $E(x_n)$ is the energy of the state;

- $x_n$, $T$ is the absolute temperature; and

- $k$ is the Boltzman constant.

It is essential that:

- the lower the energy of the state, the greater its probability; and

- the lower the temperature, the stronger the difference in probabilities.

For close to zero temperature the state with the minimal energy has probability close to 1.0.

An important peculiarity of the last expression is that the probabilities of the states $x_n$ depend only on the difference between their energies $E_n$. In other words, if you add an arbitrary energy $\Delta E$ to all $E_n$, then the probabilities of the states do not change.

Simulation of the physical process of annealing means that we simulate the system starting from a relatively high temperature T, and then gradually decline the temperature to a close to zero value. Such a gradual reduction of the temperature facilitates escaping local minima, and increases the probability of achieving the global minimum.

The metaphor of simulated annealing for our problem could be specified by the following modification of the last expression:

$$

P(J_n) = \frac{exp(-Z(J_n) / T)}{\sum\limits_{i \in \Omega} exp(-Z(J_i) / T)}, n \in \Omega.

$$

Here,

- $P(J_n)$ is the probability of choosing the decision vector $J_n$;

- $Z(J)$ is the value of the objective function for the decision $J$ expressed in the units of cost;

- $T$ is the “temperature” expressed in the units of cost; and

- $\Omega$ is a subset of possible decisions chosen for comparison.

In traditional simulated annealing, $\Omega$ includes only two randomly chosen solution vectors; one of which corresponds to the current state. We often deviate from this rule and choose a wider set; for example, all N possible vectors differing by a single component, i.e. by a single row.

An optimization session consists of a series of consecutive steps, where each step includes:

- a random choice of the set $\Omega$ of solutions-candidates;

- a calculation of the probabilities $P(J_n)$ for each element $J_n$ of $\Omega$ according to the last expression; and

- a random choice from $\Omega$ according to the calculated probabilities $P$.

We usually split all the steps of the process into iterations, where a single iteration implies that all rows (transportation tasks) have been processed, on average, once. We usually keep all parameters constant during one iteration. An essential detail is that, besides the temperature $T$, we also change parameter $\alpha$ in the expression for objective function: smaller values of $\alpha$ correspond to higher tolerance with respect to violation of constraints. Other parameters could also be subject to gradual changes during the optimization session.

A general rule of thumb is to start from loose constraints (low values of $\alpha$) and high temperature, and then gradually reduce temperature $T$ and increase parameter $\alpha$. The choice of a good optimization strategy requires experimentation and experience. The process is essentially stochastic. At least several sessions differing by the seed values of the generator of pseudorandom numbers are normally performed. For complex enough problems, the process usually converges to different solution vectors depending on the seed-values.

The efficiency of metaheuristic algorithms for a low-dimensional case

In contrast to regular optimization methods like MIP, metaheuristic algorithms are not guaranteed to achieve the global minimum. A question of practical interest is to what extent these algorithms are efficient, i.e. how closely they are capable of approaching the globally optimal solution.

To estimate the efficiency of the metaheuristic approach we used a synthetic dataset with dimensionality D=1000, namely, with M=100 transportation tasks and N=10 transportation options. Firstly, we generated Python code for OR-Tools and executed it. The value of the objective function that OR-Tools produced, i.e. the global minimum, is 0.158359 million USD. Secondly, we ran 7 random optimization sessions using our metaheuristic algorithm, with different initial values of the seed of the pseudorandom numbers generator controlling the optimization.

It is hardly reasonable to start here from randomly selected solutions because they will almost certainly violate some constraints. Therefore, we started from a random optimization session aimed only at satisfying the constraints. All the variable and constant costs were set to zero value to make the objective function depend only on the penalty term. A random solution obtained this way, i.e. satisfying all constraints, was then used as the initial vector value $J_0$ for the optimization process itself. This starting point, on one hand, satisfies all the constraints. On the other hand, the state has been achieved without taking the costs into account and, thus, has not been optimized with respect to the costs in any way.

At least two parameters were changed in the course of the optimization process: the “temperature” $T$ and parameter $\alpha$ determining the strictness of the constraints. Optimization tracks, i.e. the values of the total cost $Z$, achieved at each iteration are shown in Fig.9.

The global minimum obtained via MIP-solver in OR-Tools is shown as the black horizontal line. It is necessary to take into account that all constraints are guaranteed to be satisfied only at the initial iteration 0 (because this state was achieved via constraints-only optimization), and at the last iteration #39. This explains why the total cost at some iterations is below the global minimum (iteration #21) or very close to it (iteration #1). We used a two-cycle scenario when parameters change gradually and monotonically within a cycle, and they switch abruptly between the cycles. This explains the two-maxima shape of the curves. The concrete values of the total cost achieved in various random sessions are shown in Table 1.

| Optimization session | The minimal cost (min. USD) |

| OR-Tools (the global minimum) | 0.158359 |

| Session 1 | 0.163723 |

| Session 2 | 0.165343 |

| Session 3 | 0.163007 |

| Session 4 | 0.166876 |

| Session 5 | 0.162972 |

| Session 6 | 0.167403 |

| Session 7 | 0.165001 |

Table 1. The minimal cost achieved in various sessions

It is clearly seen from the results in Fig.9 and Table 1 that the metaheuristic algorithm does not achieve the global minimum of the cost exactly. On the other hand, the algorithm is still capable of approaching the minimum quite closely (as compared to the starting values achieved by constraints-only optimization). It is not clear to what extent this conclusion could be extrapolated to high dimensionality D like millions or billions of variables because we see no practical method to know the global minimum for cases of that high dimensionality.

Execution time

Strict comparison of execution time for MIP and metaheuristic algorithms in general is hardly possible. For both classes of optimization algorithms, the execution time is strongly dependent on the implementation of the algorithm, and on the data properties. Moreover, the metaheuristic algorithms – in contrast to the regular ones – allow quite an arbitrary choice of the number of iterations. Often,but not always, the regular algorithms are faster.

We performed a series of experiments with different dimensionality of the data for both algorithms we used above: MIP (OR-Tools, Python interface), and tailormade metaheuristic algorithm (C language). The dependence of the execution time on dimensionality of the optimization problem is shown in Fig.10.

For this particular case, the metaheuristic algorithm is faster. It is also noticeable that execution time of the metaheuristic algorithm is approximately proportional to the dimensionality, while the execution time of the MIP-algorithm tends to grow faster than linearly with the increase of dimensionality.

Does the objective value gap depend on the dimensionality?

The relative gap between the minimal cost achieved by the regular (MIP), and metaheuristic (simulated annealing) approaches to optimization can depend on the dimensionality. The question is of practical interest whether the gap tends to change in any direction when the dimensionality increases.

We have performed a series of experiments with different values of the seed of the pseudorandom numbers generator controlling the process of simulated annealing. The results are presented in Fig.11.

It is hardly possible to conclude any clear trend from Fig.11. We definitely cannot generalize this observation for higher dimensionality or other datasets. Nevertheless, we might conclude that, even if such a trend exists, it is not a universal phenomenon.

High dimensionality is a challenge for regular methods

If the data dimensionality is low enough, MIP-solvers like OR-Tools are definitely preferable as compared to metaheuristic algorithms at least on two grounds:

- the regular algorithms are capable of achieving the global minimum;

- the regular algorithms work much faster.

Unfortunately, even moderately high dimensionality of the optimization problem results in the inability of the regular algorithms to provide a feasible solution, at least within a reasonable time. For example, when we increased the dimensionality of the problem from D=2000 to D=3000, the Python compiler failed on the automatically generated code with message: “RecursionError: maximum recursion depth exceeded during compilation”. It is worth noting that this error occurred in the compilation stage, not during execution of our automatically generated code for OR-Tools. This means that the limit cannot be overcome by a simple increase in the size of the Python runtime stack (maximum recursion depth).

By no means do we claim here that this concrete dimensionality boundary has any universal meaning and reflects some limitations of the underlying engine. The boundary is likely to be due to some peculiarity of the Python compiler itself and it is likely to change if we use other programming interfaces available for OR-Tools, e.g. C++ or C#. Nevertheless, in our experience, methods of regular optimization like MIP are prone to fail when dimensionality of the optimization problem becomes large enough.

Further complications: Optimal container and vehicle utilization

To illustrate the capabilities of the metaheuristic approach, we generated a synthetic dataset for more complex settings: when the transportation is performed using “containers” contributing additional constant costs to the overall cost to be minimized. Such a problem provides an additional criterion for illustration of workability and practical usefulness of the metaheuristic approach.

If we do not include the “quantization” in the problem definition, then we get a final solution where a great fraction of the containers are filled only partially. At the same time, a practically desirable solution would have resulted in a high filling factor, say, 0.8-0.9 and more. The distribution of the filling fraction of underfilled containers for the initial vector is shown on the top panel of Fig.12 as a histogram. The bottom panel shows the histogram after optimization using our metaheuristic algorithm.

The positive effect of optimization is clearly noticeable from Fig.12: the distribution has shifted radically rightwards in the course of optimization, i.e. towards a much higher filling fraction of the containers. It is easier to understand the degree of the improvement quantitatively if you look at the percentiles of the two distributions – before and after optimization – in Table 2 below.

| Percent | 1% | 10% | 25% | 50% | 75% | 90% | 99% |

| Before optimization: | 0.0084 | 0.12 | 0.27 | 0.49 | 0.74 | 0.88 | 0.994 |

| After optimization: | 0.43 | 0.73 | 0.85 | 0.93 | 0.97 | 0.99 | 0.999 |

Table 2. Percentiles of the filling fraction for underloaded containers

We can see from Table 2, for example, that the 25-th percentile (column “25%”) after optimization increased from 0.27 to 0.85. Technically, the 25-th percentile is such a value of the filling fraction that 25% of underloaded containers have lower values and, respectively, 75% of the containers have higher values. In other words, after optimization, three quarters (75%) of the containers are filled at least by 85% (0.85) of their nominal capacity, while before optimization this fraction was only 27% (0.27).

Insightful representations for analytics

Besides the mere optimization process, our solutions normally provide a range of additional options for analysis of the situation at large. For example, the mere fact that the solution satisfies all the constraints is often not sufficient in practice. It is desirable to identify the bottlenecks, to reveal hidden reserves, etc. We found that such a simple metric as the ratio of the actual throughputs to their maximally allowed values (constraints) has proven to be very useful in practice, especially if presented in a form convenient to managers and analysts.

In Fig.13, for example, we visualized the ratio actual/maximal for a 2D table representing the throughputs for 5 regional DCs at 10 consecutive calendar shipping days. It is clear that the regional DCs #1 and #3 operate close to their maximal capacity most days, when the actual/constraint ratio is 1.0. At the same time, DC #5 is definitely underused.

Other constraints might require more complex visualizations. For example, the constraints on the capabilities of particular carriers at particular DCs and particular shipment dates (see Fig.4) imply a 3D table: carrier, DC, shipment day. Various 2D slices of the table bear valuable information. Therefore, in our solutions, we provide an opportunity for the user to select the desired variant of visual representation.

Computational complexity of the metaheuristic solutions

To reduce the computational time necessary for optimization, we usually use low-level “fast” programming languages, like C/C++, and optimized libraries at the heart of the solutions. This makes it possible to achieve practically valuable results in acceptable time even for high-dimensional problems.

For example, the optimization problem we described above was tested for the case of 1 billion binary variables: 1 million transportation tasks x 1000 transportation options for each task. Such a high dimensionality might arise even for moderately sized businesses as we showed in subsection 1.2. One iteration of our algorithm required 150 seconds on a middle-tier business notebook. Typically, 5-20 such iterations are enough for practically valuable optimization. Thus, the overall duration of an optimization session for the problem of this dimensionality is 5-60 minutes, which is acceptable for most applications.

The metaheuristic algorithm is typically characterized by an approximately linear dependence of the computation time of one iteration on the effective dimensionality (expressed in the number of binary variables necessary for MIP and related regular algorithms).

Discussion

Comparison of regular and metaheuristic methods of optimization

Mathematical rigor. The methods of regular optimization normally rest on a firm mathematical background. This allows us to find globally optimal solutions or at least to decide whether a particular solution is really globally optimal. In contrast, metaheuristic approaches normally achieve reasonable reduction of the objective function (e.g. the total cost), but they cannot guarantee achievement of the global optimum or at least help to decide if the given solution is the global optimum or not.

Availability of solvers. If methods of regular optimization are suitable for the problem at hand, we may use readily available solvers. Such solvers are available for LP, MIP, and related techniques. Examples of such solvers are Google OR-tools (free, open source) and Gurobi (commercially available). In contrast, metaheuristic algorithms are implemented ad hoc, for each particular case separately. Typically we use low-level languages like C/C++ to program the whole business logic into a rapidly computable objective function. The strategy of minimization of this objective function also requires some domain knowledge and experimentation for new datasets.

Reliability. In most business settings, we should be sure that the solution will be provided within some pre-specified time interval, say in 10 minutes or in 5 hours. The algorithms of regular optimization may fail here in two respects: either the solution is not achieved in due time, or the iterations do not converge. For LP and MIP problems of large enough dimensionality, the computation time is often not well predictable, and it may depend essentially on the data itself. In contrast, most metaheuristic algorithms provide noticeable minimization of the objective function in the given time interval and, in this sense, they may be judged as more reliable.

Universality and flexibility. The mathematical formulation of the business problem is usually only some approximation of the real business problem itself. Therefore, we often vary the formulation of the optimization problem within some limits. Even minor changes can make methods of regular optimization infeasible. For example, incorporation of nonlinearity even into a single constraint or into the objective function may lead to violation of the basic underlying assumptions of LP- and MIP-based algorithms. In contrast, the metaheuristic approaches are normally robust to such types of changes: minor changes in the problem formulation normally require only minor changes in the algorithm.

Scalability. Real business and manufacturing settings are never static. The dimension of the problems to be solved tends to increase as the business matures. The methods of regular optimization, like LP and MIP, are characterized by non-well-predictable computational complexity. Moreover, this complexity may have strong dependence on the dimensionality of the initial optimization problem: high-order polynomial or even exponential. This means that a moderate increase in the dimensionality of the problem, e.g. due to opening new distribution centers, shops, or transportation options, can prevent finding the solution. In contrast, metaheuristic approaches are usually characterized by approximately linear dependency of their computational complexity on the dimensionality. This makes them much more easily scalable as compared to their regular counterparts.

The following table summarizes the consideration on comparison of the two groups of approaches to optimization:

| Characteristic | Regular approaches | Metaheuristic approaches |

| Mathematical rigor | + | – |

| Availability of solvers | + | – |

| Reliability | – | + |

| Universality and flexibility | – | + |

| Scalability | – | + |

Which approach to use: Regular or metaheuristic?

A simplified answer to this question follows. We try to use regular approaches as long as they are capable of providing the optimal solution within the given time and have some practically reasonable reserve for the growth of dimensionality. But when the dimensionality is too high for regular methods, we develop and use metaheuristic approaches to optimization. In other words, the regular methods are preferable due to their mathematical rigor and capability of finding the globally optimal solution. Only when the regular approaches fail – mainly for reasons of high dimensionality of the problem – we use the metaheuristic ones.

It is necessary to bear in mind here that the overall dimensionality of the initial problem is not necessarily the major criterion to make a decision. Some problems of high initial dimensionality may still be decomposed into many problems of essentially lower dimensionality. In such a situation, it is usually reasonable to start from regular methods of optimization, applying them to each sub-problem separately. Unfortunately, many practically interesting problems do not succumb easily to such a decomposition.

Order and inventory sourcing decisions optimization: Conclusions

Optimization of sourcing decisions in networks with multiple SKUs, DCs, and carriers often face the challenge of high dimensionality. As we have shown above, even a business of a moderate scale can easily meet the necessity to solve an optimization problem with, say, 1 billion variables. Rigorous mathematical methods, like mixed integer programming, normally fail when the dimensionality exceeds some level. We overcome this challenge in our solutions via application of tailormade metaheuristic algorithms for stochastic optimization, e.g. simulated annealing. We have shown that for low-dimensional problems these algorithms are capable of approaching the global optimum quite closely. For high-dimensional problems, when regular methods do not work and the global optimum is not known in principle, metaheuristic algorithms provide significant reduction of the objective function in a practically acceptable time.

References

- E.Munapo & S.Kumar, Linear Integer Programming: Theory, Applications, Recent Developments, 2021

- X.-S.Yang, Introduction to Mathematical Optimization: From Linear Programming to Metaheuristics, 2008